
LiDAR: A photonics guide to the autonomous vehicle market

Slawomir Piatek, PhD, Hamamatsu Corporation & New Jersey Institute of Technology

Jake Li, Hamamatsu Corporation

November 18, 2017

Comparing LiDAR with competing sensor technologies (camera, radar, and ultrasonic) reinforces the need for sensor fusion, as well as for careful selection of photodetectors, light sources, and MEMS mirrors.

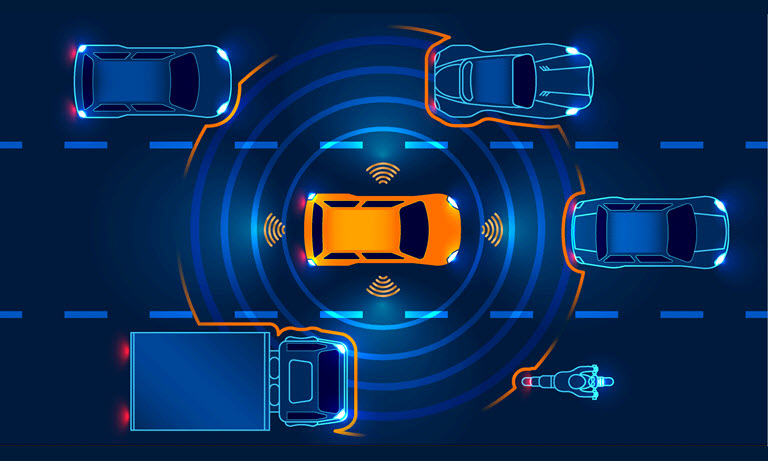

Advances in sensor technology, imaging, radar, light detection and ranging (LiDAR), electronics, and artificial intelligence have enabled dozens of advanced driver assistance systems (ADAS), including collision avoidance, blind-spot monitoring, lane departure warning, and park assist. Synchronizing the operation of such systems through sensor fusion allows fully autonomous or self-driving vehicles to monitor their surroundings, warn drivers of potential road hazards, and even take evasive action independently of the driver to prevent a collision.

Autonomous vehicles must also differentiate and recognize objects ahead while traveling at high speed. Using distance-gauging technology, these self-driving cars must rapidly construct a three-dimensional (3D) map out to a distance of about 100 m, as well as create high-angular-resolution imagery at distances up to 250 m. And if no driver is present, the vehicle's artificial intelligence must make optimal decisions on its own.

One of several basic approaches to this task measures the round-trip time of flight (ToF) of a pulse of energy traveling from the autonomous vehicle to the target and back. The distance to the reflection point can then be calculated from the known speed of the pulse through the air; the pulse can be ultrasound (sonar), radio waves (radar), or light (LiDAR).
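To show how different the timing scales are for the three kinds of pulse, the sketch below computes the round-trip time Δt = 2R/v for a target 100 m away. The speed of sound (about 343 m/s in dry air near 20 °C) and the 100 m distance are illustrative assumptions, and radar and LiDAR are both treated as traveling at c (n ≈ 1 for air).

```python
# Round-trip time of flight, dt = 2R / v, for a target at distance R.
# Assumed values (not from the article): speed of sound ~343 m/s in dry air
# near 20 degC, and n = 1 for air so radio waves and light travel at c.
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact value in vacuum
SPEED_OF_SOUND = 343.0          # m/s, assumed


def round_trip_time(distance_m: float, speed_m_per_s: float) -> float:
    """Time for a pulse to travel to a target at distance_m and back."""
    return 2.0 * distance_m / speed_m_per_s


if __name__ == "__main__":
    target = 100.0  # m, illustrative distance
    print(f"sonar:       {round_trip_time(target, SPEED_OF_SOUND):.3f} s")
    print(f"radar/LiDAR: {round_trip_time(target, SPEED_OF_LIGHT) * 1e9:.1f} ns")
```

The echo from 100 m takes about 0.58 s for ultrasound but only about 667 ns for radio waves or light, so radar and LiDAR timing electronics must work on nanosecond scales or finer.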

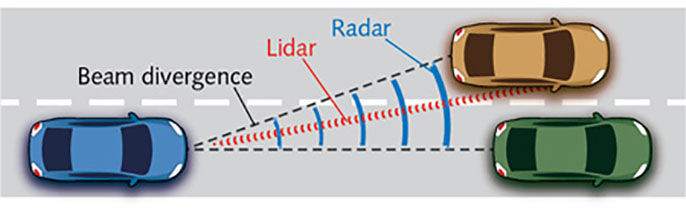

Of these three ToF techniques, LiDAR is the best choice for higher-angular-resolution imagery because its smaller diffraction (beam divergence) allows better discrimination of adjacent objects than radar (see Figure 1). This higher angular resolution is especially important at high speed, where it provides enough time to respond to a potential hazard such as a head-on collision.

Figure 1. Beam divergence depends on the ratio of the wavelength to the aperture diameter of the emitting antenna (radar) or lens (LiDAR). This ratio is larger for radar, producing larger beam divergence and, therefore, coarser angular resolution. In the figure, the radar (black) would not be able to differentiate between the two cars, while LiDAR (red) would.
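The λ/D scaling in Figure 1 can be made concrete with an order-of-magnitude estimate. The sketch below assumes a 77 GHz automotive radar with a 10 cm antenna and a 905 nm LiDAR with a 10 mm lens; these sizes are hypothetical, and θ ≈ λ/D is only the diffraction-limited scale (constant factors of order one are dropped).

```python
import math

C = 299_792_458.0  # m/s


def divergence_rad(wavelength_m: float, aperture_m: float) -> float:
    """Order-of-magnitude diffraction-limited divergence, theta ~ lambda / D."""
    return wavelength_m / aperture_m


def footprint_m(distance_m: float, theta_rad: float) -> float:
    """Approximate beam footprint at a given distance (small-angle approximation)."""
    return distance_m * theta_rad


if __name__ == "__main__":
    distance = 100.0                                      # m
    radar_wavelength = C / 77e9                           # ~3.9 mm, assumed 77 GHz radar
    radar_theta = divergence_rad(radar_wavelength, 0.10)  # assumed 10 cm antenna
    lidar_theta = divergence_rad(905e-9, 0.01)            # assumed 10 mm lens
    print(f"radar: {math.degrees(radar_theta):.2f} deg, "
          f"footprint {footprint_m(distance, radar_theta):.2f} m")
    print(f"LiDAR: {math.degrees(lidar_theta):.4f} deg, "
          f"footprint {footprint_m(distance, lidar_theta) * 1e3:.1f} mm")
```

Under these assumptions the radar footprint at 100 m is several meters wide, roughly the width of two cars side by side, while the diffraction-limited LiDAR footprint is under a centimeter. Real collimated laser-diode beams diverge more than this diffraction limit, but they typically remain far narrower than a radar beam.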

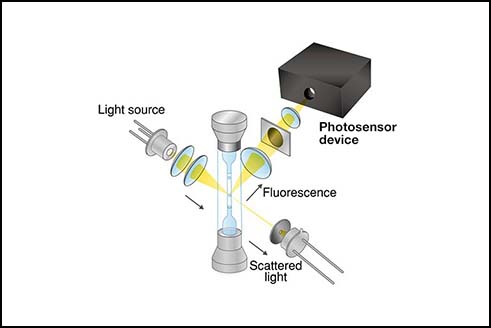

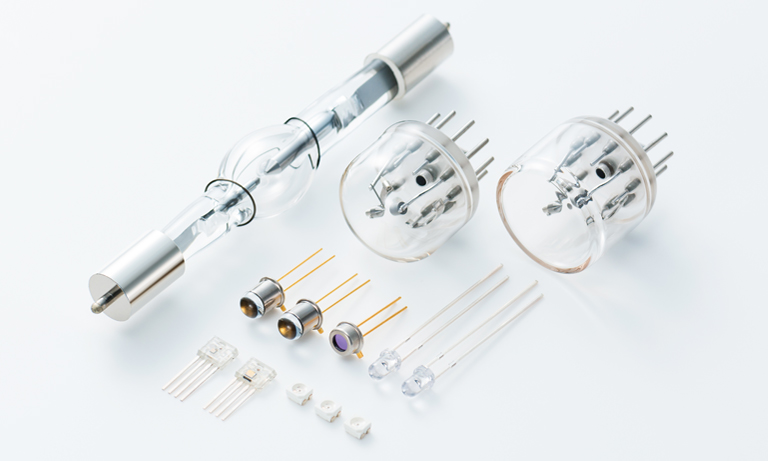

Laser source selection

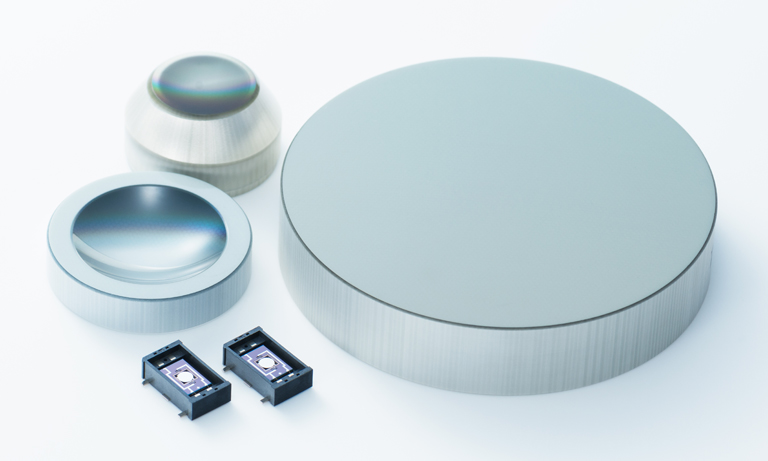

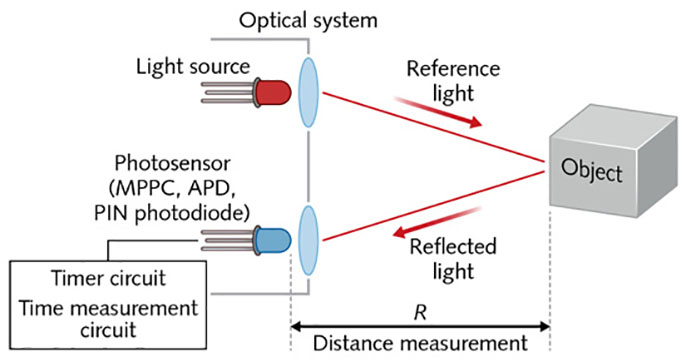

In ToF LiDAR, a laser emits a pulse of light of duration τ that activates the internal clock in a timing circuit at the instant of emission (see Figure 2). The reflected light pulse from the target reaches a photodetector, producing an electrical output that deactivates the clock. This electronically measured round-trip ToF Δt allows calculation of the distance R to the reflection point.

Figure 2. Basic setup for time-of-flight (ToF) LiDAR.

If the laser and photodetector are practically at the same location, the distance is given by:

$$R = \frac{c\,\Delta t}{2n} \qquad (1)$$

where c is the speed of light in vacuum and n is the index of refraction of the propagation medium (for air, approximately 1). Two factors affect the distance resolution ΔR: the uncertainty δΔt in measuring Δt and the spatial width w of the pulse (w = cτ), if the diameter of the laser spot is larger than the size of the target feature to be resolved.
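Equation 1, R = cΔt/(2n), translates directly into code. The sketch below is illustrative only; the value n ≈ 1.0003 for air is an assumed typical sea-level figure used to show how small the correction is compared with taking n = 1.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def distance_from_tof(delta_t_s: float, n: float = 1.0) -> float:
    """Equation 1: R = c * dt / (2 n), laser and detector co-located."""
    return C * delta_t_s / (2.0 * n)


if __name__ == "__main__":
    dt = 667.128e-9  # s, illustrative measured round-trip time
    print(f"n = 1.0000: R = {distance_from_tof(dt):.3f} m")
    print(f"n = 1.0003: R = {distance_from_tof(dt, 1.0003):.3f} m")  # assumed n for air
```

At 100 m, using n = 1.0003 instead of 1 shortens the computed distance by about 3 cm, which is small but not negligible next to a 5 cm resolution target.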

The first factor implies ΔR = ½cδΔt, whereas the second implies ΔR = ½w = ½cτ. If the distance is to be measured with a resolution of 5 cm, these relations separately imply that δΔt must be about 330 ps and τ about 330 ps. Time-of-flight LiDAR therefore requires photodetectors and detection electronics with small time jitter (the main contributor to δΔt) and lasers capable of emitting short-duration pulses, such as relatively expensive picosecond lasers. A laser in a typical automotive LiDAR system produces pulses of about 4 ns duration, whose spatial width of about 1.2 m would limit the resolution to roughly 60 cm whenever the laser spot is larger than the target feature. Minimal beam divergence, which keeps the spot small, is therefore essential.
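Both resolution limits share the form ΔR = ½c·t, where t is either the timing uncertainty δΔt or the pulse duration τ. The minimal sketch below inverts that relation for the 5 cm example and evaluates it for a 4 ns pulse. The function names are illustrative.

```python
C = 299_792_458.0  # m/s


def time_for_resolution(delta_r_m: float) -> float:
    """Timing uncertainty (or pulse duration) that gives resolution dR: t = 2 dR / c."""
    return 2.0 * delta_r_m / C


def resolution_for_time(t_s: float) -> float:
    """Resolution implied by a timing uncertainty or pulse duration: dR = c t / 2."""
    return 0.5 * C * t_s


if __name__ == "__main__":
    print(f"5 cm target -> {time_for_resolution(0.05) * 1e12:.0f} ps")
    print(f"4 ns pulse  -> {resolution_for_time(4e-9):.2f} m")
```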

One of the most critical choices for automotive LiDAR system designers is the light wavelength. Several factors constrain this choice: safety to human vision, interaction with the atmosphere, and the availability of lasers and photodetectors. The two most popular wavelengths are 905 and 1550 nm. The primary advantage of 905 nm is that silicon absorbs photons at this wavelength, and silicon-based photodetectors are generally less expensive than the indium gallium arsenide (InGaAs) infrared (IR) photodetectors needed to detect 1550 nm light. However, the greater human-vision safety of 1550 nm allows the use of lasers with a larger radiant energy per pulse, an important factor in the photon budget.
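One further detail of the photon budget: at equal pulse energy, the longer wavelength carries more photons because each photon's energy, E = hc/λ, is smaller. The sketch below uses a hypothetical 1 µJ pulse for both wavelengths.

```python
H = 6.626_070_15e-34  # Planck constant, J*s (exact SI value)
C = 299_792_458.0     # m/s


def photons_per_pulse(pulse_energy_j: float, wavelength_m: float) -> float:
    """Number of photons in a pulse: N = E / (h c / lambda)."""
    return pulse_energy_j * wavelength_m / (H * C)


if __name__ == "__main__":
    energy = 1e-6  # J, hypothetical 1 uJ pulse
    n905 = photons_per_pulse(energy, 905e-9)
    n1550 = photons_per_pulse(energy, 1550e-9)
    print(f"905 nm: {n905:.3e} photons, 1550 nm: {n1550:.3e} photons")
    print(f"ratio: {n1550 / n905:.3f}")
```

Each joule at 1550 nm therefore carries about 1.7 times as many photons as at 905 nm, in addition to the higher pulse energy that eye safety permits. The detector's quantum efficiency at each wavelength, not modeled here, also enters the budget.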

Atmospheric attenuation (under all weather conditions), scattering from airborne particles, and reflectance from target surfaces are all wavelength-dependent. This is a complex issue for automotive LiDAR because of the myriad of possible weather conditions and types of reflecting surfaces. Under most realistic settings, the loss of light is smaller at 905 nm because water absorption is stronger at 1550 nm than at 905 nm [1].

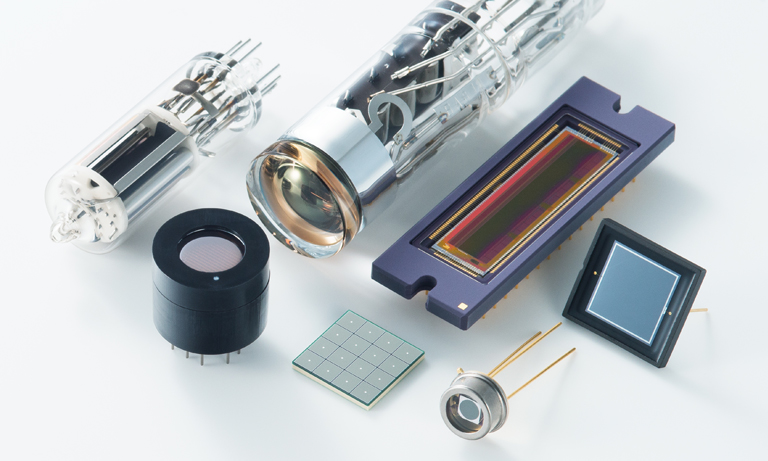

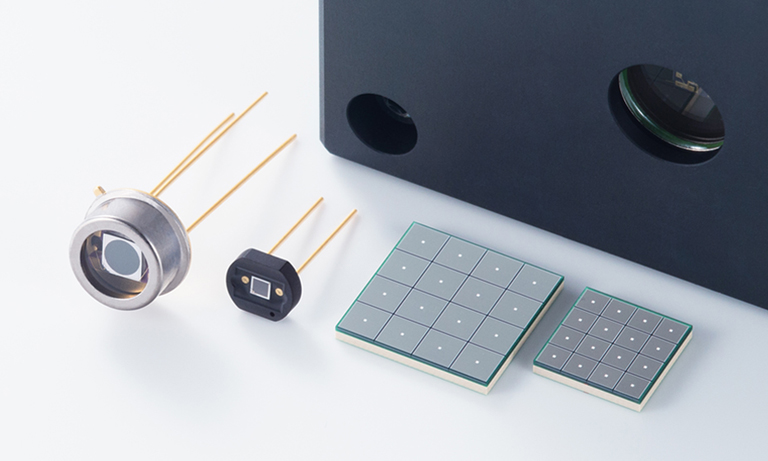

Photon detection options

Only a small fraction of photons emitted in a pulse ever reach the active area of the photodetector. If the atmospheric attenuation does not vary along the pulse's path, the beam divergence of the laser light is negligible, the illumination spot is smaller than the target, the angle of incidence is zero, and the reflection is Lambertian, then the optical received peak power P(R) is:

$$P(R) = P_0\,\rho\,\eta_0\,\frac{A_0}{\pi R^2}\,e^{-2\gamma R} \qquad (2)$$

where P0 is the optical peak power of the emitted laser pulse, ρ is the reflectivity of the target, A0 is the receiver's aperture area, η0 is the detection optics' spectral transmission, and γ is the atmospheric extinction coefficient.

This equation shows that the received power decreases rapidly with increasing distance R. For a reasonable choice of the parameters and R = 100 m, the number of returning photons on the detector's active area is on the order of a few hundred to a few thousand from the more than 10²⁰ typically emitted. These photons compete for detection with background photons carrying no useful information.
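Equation 2 can be evaluated directly to check the order of magnitude of the returning photon count. Every parameter value below is a hypothetical choice, not taken from the article: 75 W peak power, 10% target reflectivity, a 25 mm diameter receiver lens, 90% optics transmission, γ = 10⁻⁴ m⁻¹ (fairly clear air), a 4 ns pulse, and 905 nm light. The return is treated as a rectangular pulse when converting power to photons.

```python
import math

H = 6.626_070_15e-34  # J*s
C = 299_792_458.0     # m/s


def received_peak_power(p0_w, rho, area_m2, eta0, gamma_per_m, r_m):
    """Equation 2 (Lambertian target, spot smaller than target, normal incidence)."""
    return (p0_w * rho * area_m2 * eta0
            * math.exp(-2.0 * gamma_per_m * r_m) / (math.pi * r_m ** 2))


def returned_photons(p_rx_w, pulse_s, wavelength_m):
    """Photon count, treating the return as a rectangular pulse of width pulse_s."""
    return p_rx_w * pulse_s * wavelength_m / (H * C)


if __name__ == "__main__":
    aperture = math.pi * 0.0125 ** 2  # m^2, assumed 25 mm diameter lens
    p_rx = received_peak_power(p0_w=75.0, rho=0.1, area_m2=aperture,
                               eta0=0.9, gamma_per_m=1e-4, r_m=100.0)
    n = returned_photons(p_rx, pulse_s=4e-9, wavelength_m=905e-9)
    print(f"received peak power: {p_rx:.2e} W, about {n:.0f} photons")
```

With these assumed values the estimate lands at roughly 1,900 photons, within the few-hundred-to-few-thousand range quoted above. Doubling R cuts the return by a factor of four from the 1/R² term alone.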

Using a narrowband filter can reduce the amount of background light reaching the detector, but it cannot reduce it to zero. Background light reduces the detection dynamic range and raises the noise (background photon shot noise). Notably, the terrestrial solar irradiance under typical conditions is lower at 1550 nm than at 905 nm.
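The cost of background light can be illustrated with the shot-noise-limited signal-to-noise ratio, SNR = N_s / √(N_s + N_b), where N_s and N_b are detected signal and background counts in the timing window. This is a minimal sketch under stated assumptions: Poisson statistics only, with dark counts, APD excess noise, and amplifier noise ignored. The count values are hypothetical.

```python
import math


def shot_noise_snr(signal_counts: float, background_counts: float) -> float:
    """Shot-noise-limited SNR: N_s / sqrt(N_s + N_b), Poisson statistics.

    Counts are detected photoelectrons within the timing window. Dark counts,
    detector excess noise and amplifier noise are ignored (assumption).
    """
    return signal_counts / math.sqrt(signal_counts + background_counts)


if __name__ == "__main__":
    for background in (0, 500, 2000, 10000):  # hypothetical background levels
        print(f"N_b = {background:>5}: SNR = {shot_noise_snr(500, background):.1f}")
```

With 500 signal counts, the SNR falls from about 22 with no background to 10 with 2000 background counts, even though the signal itself is unchanged.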

Creating a 3D map in a full 360° × 20° strip surrounding a car requires a raster-scanned laser beam or multiple beams, or flooding the scene with light and gathering a point cloud of data returns. The former approach is known as scanning LiDAR and the latter as flash LiDAR.

There are several approaches to scanning LiDAR. In the first, exemplified by Velodyne (San Jose, CA), the roof-mounted LiDAR platform rotates at 300 to 900 rpm while emitting pulses from sixty-four 905 nm laser diodes. Each beam has a dedicated avalanche photodiode (APD) detector. A similar approach uses a rotating multi-faceted mirror, with each facet at a slightly different tilt angle, to steer a single beam of pulses to different azimuthal and elevation angles. The moving parts in both designs represent a failure risk in mechanically rough driving environments.
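For a rotating scanner, the rotation rate and the pulse firing rate together set the azimuthal spacing between measurements. The sketch below assumes a hypothetical firing rate of 18 kHz per laser (not a figure from the article) and evaluates the 300 to 900 rpm range quoted above, which corresponds to 5 to 15 full revolutions per second.

```python
def azimuth_step_deg(rpm: float, firing_rate_hz: float) -> float:
    """Azimuthal angle swept between consecutive pulses of one laser."""
    degrees_per_second = 360.0 * rpm / 60.0
    return degrees_per_second / firing_rate_hz


def points_per_second(n_lasers: int, firing_rate_hz: float) -> float:
    """Total range measurements per second if every laser fires at the same rate."""
    return n_lasers * firing_rate_hz


if __name__ == "__main__":
    firing_rate = 18_000.0  # Hz per laser, hypothetical value
    for rpm in (300, 600, 900):
        print(f"{rpm} rpm: {azimuth_step_deg(rpm, firing_rate):.2f} deg per pulse")
    print(f"64 lasers: {points_per_second(64, firing_rate):.3g} points/s")
```

At a fixed firing rate, slower rotation gives finer azimuthal sampling but a lower frame rate, so the two must be traded against each other.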

The second, more compact approach to scanning LiDAR uses a tiny microelectromechanical systems (MEMS) mirror to electrically steer a beam or beams in two dimensions. Although technically there are still moving parts (oscillating mirrors), the amplitude of the oscillation is small and the frequency is high enough to prevent mechanical resonances between the MEMS mirror and the car. However, the confined geometry of the mirror constrains its oscillation amplitude, which translates into a limited field of view, a disadvantage of this MEMS approach. Nevertheless, this method is gaining interest because of its low cost and proven technology.
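The link between oscillation amplitude and field of view follows from the law of reflection. Tilting a mirror by an angle a deflects the reflected beam by 2a, so a ±a oscillation sweeps the beam over 4a. The sketch below uses a hypothetical ±5° mechanical amplitude and ignores any additional optics that might widen the scan.

```python
import math


def optical_scan_deg(mechanical_amplitude_deg: float) -> float:
    """Full optical scan angle for a mirror oscillating +/- amplitude.

    Tilting a mirror by a deflects the reflected beam by 2a, so a +/- a
    oscillation sweeps the beam over 4a in total (ignoring any extra optics).
    """
    return 4.0 * mechanical_amplitude_deg


def units_to_cover(target_fov_deg: float, mechanical_amplitude_deg: float) -> int:
    """How many identical scanners would be needed to tile a given field of view."""
    return math.ceil(target_fov_deg / optical_scan_deg(mechanical_amplitude_deg))


if __name__ == "__main__":
    amp = 5.0  # deg, hypothetical mechanical amplitude
    print(f"+/-{amp} deg mechanical -> {optical_scan_deg(amp):.0f} deg optical")
    print(f"units for a 360 deg strip: {units_to_cover(360.0, amp)}")
```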

Optical phased array (OPA) technology, the third competing scanning LiDAR technique, is gaining popularity for its reliable, "no-moving-parts" design. It consists of arrays of optical antenna elements that are equally illuminated by coherent light. Beam steering is achieved by independently controlling the phase and amplitude of the light re-emitted by each element, and far-field interference produces the desired illumination pattern, ranging from a single beam to multiple beams. Unfortunately, light loss in the various OPA components restricts the usable range.
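Phase-controlled steering can be illustrated with the simplest textbook model: a uniform linear array of equally illuminated elements with a constant phase step Δφ between neighbors, whose main lobe points where k·d·sin θ = Δφ. The sketch below computes that angle and checks it against a brute-force far-field (array factor) scan. The 16-element array, 1.55 µm wavelength, and half-wavelength pitch are hypothetical design values, and real OPA chips are considerably more complex.

```python
import cmath
import math


def steering_angle_deg(wavelength: float, pitch: float, phase_step: float) -> float:
    """Main-lobe angle of a uniform linear array: k*d*sin(theta) = phase_step."""
    return math.degrees(math.asin(wavelength * phase_step / (2.0 * math.pi * pitch)))


def array_factor(theta_deg, n_elements, wavelength, pitch, phase_step):
    """Normalized far-field amplitude for equal-amplitude elements.

    Element m is driven with phase -m*phase_step; contributions add in phase
    where k*d*sin(theta) equals phase_step. Returns 1.0 on the main lobe.
    """
    k = 2.0 * math.pi / wavelength
    psi = k * pitch * math.sin(math.radians(theta_deg)) - phase_step
    total = sum(cmath.exp(1j * m * psi) for m in range(n_elements))
    return abs(total) / n_elements


if __name__ == "__main__":
    lam, d, dphi, n = 1.55e-6, 0.775e-6, math.pi / 4, 16  # hypothetical design
    angles = [i / 100 for i in range(-9000, 9001)]       # -90 to 90 deg, 0.01 deg steps
    peak = max(angles, key=lambda a: array_factor(a, n, lam, d, dphi))
    print(f"predicted {steering_angle_deg(lam, d, dphi):.2f} deg, "
          f"simulated peak {peak:.2f} deg")
```

The half-wavelength pitch is chosen so that no grating lobes appear in the scan range. Changing only the phase step moves the main lobe, which is the essence of steering without moving parts.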

Flash LiDAR floods the scene with light, with the illumination region matched to the field of view of the detector. The detector is an array of APDs at the focal plane of the detection optics. Each APD independently measures the ToF to the target feature imaged onto it. This is a truly "no-moving-parts" approach in which the tangential resolution is limited by the pixel size of the 2D detector.
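The pixel-size limit can be expressed as an instantaneous field of view per pixel, roughly pixel pitch divided by the focal length of the detection optics. The sketch below assumes hypothetical 100 µm APD pixels behind a 20 mm lens.

```python
import math


def pixel_ifov_rad(pixel_pitch_m: float, focal_length_m: float) -> float:
    """Angular extent of one detector pixel (small-angle approximation)."""
    return pixel_pitch_m / focal_length_m


def array_fov_deg(n_pixels: int, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Full field of view spanned by n_pixels along one axis of the array."""
    half_width = n_pixels * pixel_pitch_m / 2.0
    return math.degrees(2.0 * math.atan(half_width / focal_length_m))


if __name__ == "__main__":
    pitch, focal = 100e-6, 20e-3  # hypothetical: 100 um APD pixels, 20 mm lens
    ifov = pixel_ifov_rad(pitch, focal)
    print(f"IFOV {math.degrees(ifov):.2f} deg, footprint at 50 m: {50 * ifov:.2f} m")
    print(f"128-pixel row spans {array_fov_deg(128, pitch, focal):.1f} deg")
```

For a given array, a longer focal length gives finer tangential resolution but a narrower field of view.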

The major disadvantage of flash LiDAR, however, is photon budget: once the distance is more than a few tens of meters, the amount of returning light is too small for reliable detection. The budget can be improved at the expense of tangential resolution if instead of flooding the scene with photons, structured light—a grid of points—illuminates it. Vertical-cavity surface-emitting lasers (VCSELs) make it possible to create projectors emitting thousands of beams simultaneously in different directions.
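A rough way to quantify the structured-light trade-off uses the 1/R² dependence in Equation 2. If the same pulse energy is concentrated into fewer illuminated elements, each element receives proportionally more energy, and the maximum detectable range grows as the square root of that factor. This sketch rests on simplifying assumptions, stated in the code: equal total energy, even splitting, neglected atmospheric extinction, and the same detection threshold per element. The 128 × 128 array and 1024-dot grid are hypothetical.

```python
import math


def range_gain(n_flash_pixels: int, n_dots: int) -> float:
    """Relative maximum range of structured light vs. flash illumination.

    Assumptions: same total pulse energy, spread evenly over either all pixel
    footprints (flash) or all dots (structured light); received power per
    element scales as 1/R^2 as in Equation 2, with atmospheric extinction
    neglected; detection needs the same received energy per element.
    """
    energy_ratio = n_flash_pixels / n_dots  # energy per dot vs. per pixel footprint
    return math.sqrt(energy_ratio)


if __name__ == "__main__":
    print(f"128 x 128 flash vs 1024 dots: range x {range_gain(128 * 128, 1024):.1f}")
```

Including the exponential extinction term of Equation 2 would make the gain somewhat smaller, and the price is 16 times fewer sampled points in this example.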

Beyond time-of-flight limitations

Time-of-flight LiDAR is susceptible to noise because of the weakness of the returned pulses and wide bandwidth of the detection electronics, and threshold triggering can produce errors in measurement of Δt. For these reasons, frequency-modulated continuous-wave (FMCW) LiDAR is an interesting alternative.
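One way threshold triggering errs is amplitude-dependent "walk": a strong return crosses a fixed threshold earlier on its rising edge than a weak return from the same distance. The sketch below assumes Gaussian pulses with the 4 ns duration mentioned earlier taken as the full width at half maximum, a threshold of 1 (arbitrary units), and a hypothetical tenfold amplitude difference, such as two targets of different reflectivity.

```python
import math

C = 299_792_458.0  # m/s


def leading_edge_crossing(amplitude: float, threshold: float, sigma_s: float) -> float:
    """Time, relative to the pulse peak at t = 0, at which the rising edge of a
    Gaussian pulse A * exp(-t^2 / (2 sigma^2)) reaches a fixed threshold."""
    if amplitude <= threshold:
        raise ValueError("pulse never reaches the threshold")
    return -sigma_s * math.sqrt(2.0 * math.log(amplitude / threshold))


def walk_range_error(weak: float, strong: float, threshold: float, fwhm_s: float) -> float:
    """Range difference reported for two returns from the same distance."""
    sigma = fwhm_s / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # FWHM -> sigma
    walk = (leading_edge_crossing(weak, threshold, sigma)
            - leading_edge_crossing(strong, threshold, sigma))
    return 0.5 * C * walk


if __name__ == "__main__":
    err = walk_range_error(weak=2.0, strong=20.0, threshold=1.0, fwhm_s=4e-9)
    print(f"apparent range difference: {err * 100:.0f} cm")
```

Under these assumptions the two returns appear about 30 cm apart even though the targets are at the same distance.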

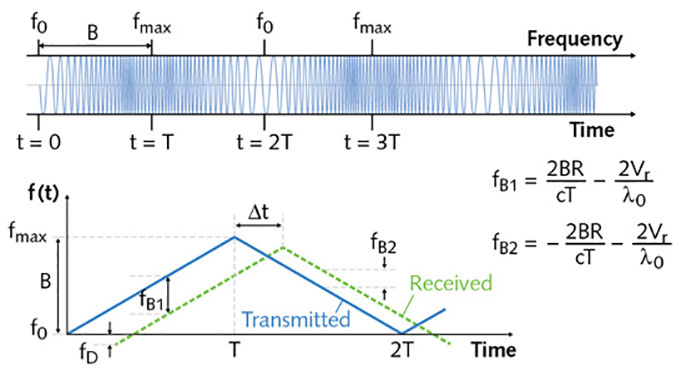

In FMCW radar, or chirped radar, the antenna continuously emits radio waves whose frequency is modulated, for example increasing linearly from f0 to fmax over time T and then decreasing linearly from fmax to f0 over time T. If the wave reflects from a moving object at some distance and returns to the emission point, its instantaneous frequency will differ from the one being emitted at that instant. The difference arises from two factors: the distance to the object and its relative radial velocity. By electronically measuring the frequency difference, one can simultaneously calculate the object's distance and velocity (see Figure 3).

Figure 3. In chirped radar, by electronically measuring fB1 and fB2 one can determine the distance to the reflecting object and its radial speed.
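For a triangular chirp with slope S = (fmax − f0)/T, the delay produces a beat component 2RS/c and the motion a Doppler component 2v/λ. These subtract on one ramp and add on the other, so averaging and differencing the two beat frequencies separates distance from velocity. In the sketch below, assigning the rising ramp to f_up and the falling ramp to f_down, the sign convention (v > 0 for an approaching target), and the 77 GHz, 300 MHz, 1 ms chirp are all assumptions. Which ramp Figure 3 labels fB1 or fB2 is not specified here.

```python
C = 299_792_458.0  # m/s


def beat_frequencies(r_m, v_mps, f0_hz, bandwidth_hz, ramp_s):
    """Forward model for a triangular chirp (v > 0: target approaching).

    Returns (f_up, f_down): beat frequencies on the rising and falling ramps.
    The Doppler shift uses the start frequency f0 (valid when bandwidth << f0).
    """
    slope = bandwidth_hz / ramp_s
    f_range = 2.0 * r_m * slope / C      # delay-induced beat, 2 R S / c
    f_doppler = 2.0 * v_mps * f0_hz / C  # Doppler shift, 2 v / lambda
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, f0_hz, bandwidth_hz, ramp_s):
    """Invert the two beat frequencies into distance and radial velocity."""
    slope = bandwidth_hz / ramp_s
    r = C * (f_up + f_down) / (4.0 * slope)
    v = C * (f_down - f_up) / (4.0 * f0_hz)
    return r, v


if __name__ == "__main__":
    params = dict(f0_hz=77e9, bandwidth_hz=300e6, ramp_s=1e-3)  # hypothetical radar
    f_up, f_down = beat_frequencies(50.0, 20.0, **params)
    print(f"f_up = {f_up / 1e3:.1f} kHz, f_down = {f_down / 1e3:.1f} kHz")
    print("recovered R, v:", range_and_velocity(f_up, f_down, **params))
```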

Inspired by chirped radar, FMCW LiDAR can be implemented in different ways. In the simplest design, the intensity of the light beam illuminating the target is chirp-modulated. The modulation frequency is subject to the same laws (such as the Doppler effect) as the carrier frequency in FMCW radar. A photodetector detects the returned light and recovers the modulation frequency; its output is amplified and mixed with a local-oscillator signal, which allows the frequency shift to be measured and, from it, the distance to and speed of the target to be calculated.

But FMCW LiDAR has some limitations. Compared to ToF LiDAR, it requires more computational power and, therefore, is slower in generating a full 3D surround view. In addition, the accuracy of the measurements is very sensitive to the linearity of the chirp ramp.
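The sensitivity to ramp linearity can be seen by adding a small quadratic term to the chirp, f(t) = f0 + S·t + a·t². The beat f(t) − f(t − τ) is then no longer constant but drifts across the ramp, so processing that assumes a linear ramp reports a smeared range. In the sketch below, the 1 GHz, 10 µs ramp, the 100 m target, and the nonlinearity levels (end-of-ramp deviation from the nominal linear ramp, as a fraction of the bandwidth) are hypothetical.

```python
C = 299_792_458.0  # m/s


def beat_frequency(t_s, tau_s, slope, quad):
    """Beat f(t) - f(t - tau) for an emitted chirp f(t) = f0 + slope*t + quad*t^2."""
    return slope * tau_s + quad * (2.0 * t_s * tau_s - tau_s ** 2)


def range_estimates(r_true_m, bandwidth_hz, ramp_s, nonlinearity, n_samples=1000):
    """Ranges inferred across one ramp when processing assumes a perfectly
    linear chirp. nonlinearity = end-of-ramp frequency deviation / bandwidth."""
    slope = bandwidth_hz / ramp_s
    quad = nonlinearity * bandwidth_hz / ramp_s ** 2
    tau = 2.0 * r_true_m / C
    estimates = []
    for i in range(n_samples):
        t = tau + (ramp_s - tau) * i / (n_samples - 1)  # echo present for t >= tau
        estimates.append(C * beat_frequency(t, tau, slope, quad) / (2.0 * slope))
    return estimates


if __name__ == "__main__":
    for eps in (0.0, 0.001, 0.01):  # hypothetical nonlinearity levels
        est = range_estimates(100.0, 1e9, 10e-6, eps)  # hypothetical 1 GHz, 10 us ramp
        print(f"nonlinearity {eps:.3f}: range spread {max(est) - min(est):.3f} m")
```

Under these assumptions, a 1% deviation at the end of the ramp smears a 100 m target over nearly 2 m, while a perfectly linear ramp returns a single range.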

Although designing a functional LiDAR system is challenging, none of these challenges are insurmountable. As the research continues, we are getting closer to the time when the majority of cars driving off the assembly line will be fully autonomous.

Reference

- J. Wojtanowski et al., Opto-Electron. Rev., 22, 3, 183-190 (2014).

Note

A version of this article was published in the November 2017 issue of Laser Focus World.
